Software development productivity is the ratio of the value of the software produced to the cost of producing it. It can be increased both by raising the value of what a software organization delivers and by reducing the cost of developing software. Many discussions of software productivity have focused on individual developers, and measuring developer productivity has proven to be a difficult problem (see CannotMeasureProductivity by Martin Fowler). Part of the problem has been a preoccupation with output (the code developers produce) rather than outcome (the benefits derived from the software). Those benefits are typically the result of large team efforts, so looking at the impact of the software engineering organization as a whole, rather than at individuals, is a better path to understanding and influencing productivity. In my experience, the best way to drive software productivity is for engineering and product/project management leadership to focus on the following areas:

Working on the right stuff

Driving productivity starts with a clear vision, direction and goals. The productivity of even the best team is zero if its efforts are misplaced, for example if the software it develops is never adopted. The vision should clearly articulate the expected benefits. Transparent goals have the added benefit of letting individuals and teams be creative in finding the best ways to achieve them.

Keeping a steady hand on the wheel

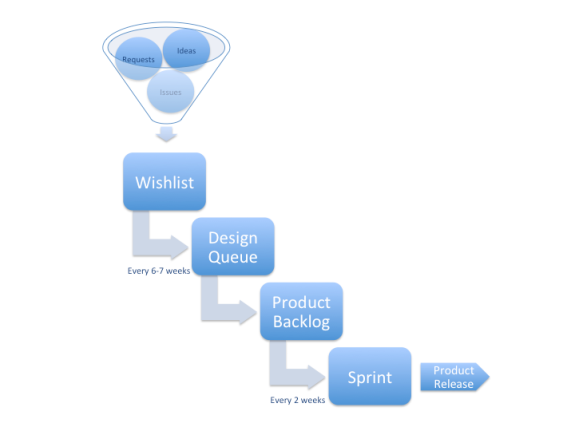

A common anti-pattern in product/project management is frequent, unwarranted changes in priorities. Direction changes are expensive. They produce throwaway work, which by definition has no value; they add context-switching overhead (ramping down on one activity and up on another takes time); and they hurt team morale. Software engineering organizations need to be agile; change is expected and often beneficial. However, agility should not be mistaken for pointing teams in different directions in reaction to a stream of random requests and ideas, or for creating emergencies through a lack of foresight. Please refer to my earlier blog post on an Agile Product Management Process for an example of how to manage change productively.

Minimizing operational chores

Time spent reacting to operational issues and fixing bugs creates no incremental value. Reducing it has multiple benefits: it frees time for innovation, giving the team the opportunity to produce more; it energizes team members; and it makes it easier to attract talent (developers prefer writing new code to maintaining old code).

There is more to driving productivity. Development processes and tools, increased reuse, a better working environment and other factors can all help. In my experience, however, the biggest impact comes from having a clear, value-oriented strategy and minimizing unproductive time. It boils down to strong engineering and product/project management leadership.